Last updated 1 year ago

As AI becomes more integrated into everyday tools and services, many developers and enthusiasts are interested in using AI models like OpenAI's GPT for tasks such as text generation, chatbots, and more. However, accessing OpenAI's models requires an internet connection to their cloud servers, which isn't always convenient or possible for certain applications. What if you need to run OpenAI-like models offline?

In this post, we will explore how to use OpenAI-compatible models offline, including options like LLaMA and Mistral, which allow you to run powerful language models on your local machine or server without the need for constant internet access.

There are several reasons why running AI models offline is advantageous:

Now, let’s dive into how you can set up and use OpenAI alternatives offline.

To use AI offline, follow these steps:

Step 1. Install Ollama, which can be found at the following link:

https://ollama.com/download

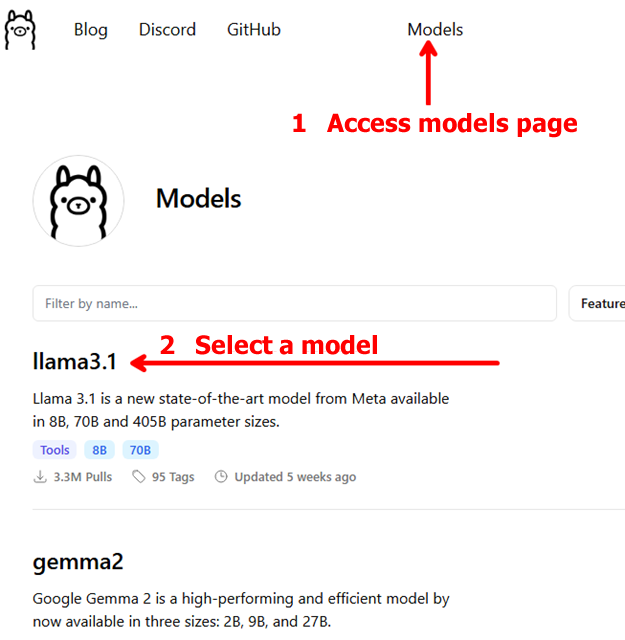

Step 2. Choose the desired model. This can be done at the following link:

https://ollama.com/library

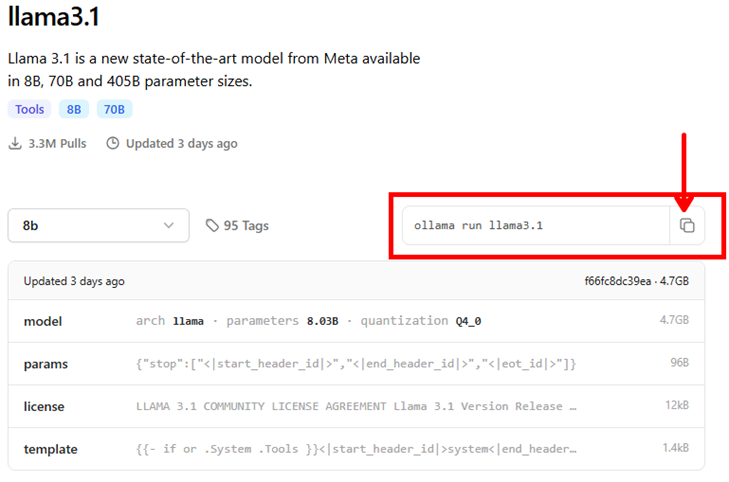

Step 3. Open Command Prompt (cmd), run the command “ollama run <model_name>”, and wait for the download to finish. On first use, this command automatically downloads the required model files and then starts an interactive chat session with the model.
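If you would rather start the model from a script than type the command by hand, the step above can be sketched in Python roughly as follows. This is only an illustration: the helper names (`build_run_command`, `ollama_available`, `run_model`) are hypothetical, and "mistral" is just an example model name from the Ollama library.

```python
import shutil
import subprocess


def build_run_command(model_name: str) -> list[str]:
    """Return the argument list for `ollama run <model_name>`."""
    name = model_name.strip()
    if not name:
        raise ValueError("model_name must not be empty")
    return ["ollama", "run", name]


def ollama_available() -> bool:
    """Return True if an `ollama` executable is found on PATH."""
    return shutil.which("ollama") is not None


def run_model(model_name: str) -> int:
    """Start an interactive session and return the process exit code.

    The first run of a model also downloads its files.
    """
    if not ollama_available():
        raise FileNotFoundError("ollama not found on PATH; complete Step 1 first")
    return subprocess.run(build_run_command(model_name)).returncode


# Show the command that would be executed, without running it.
print(" ".join(build_run_command("mistral")))
```

Calling `run_model("mistral")` would then open the same interactive session you get in the command prompt.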


💡 Where are models stored?

Windows: C:\Users\UserName\.ollama\models
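To find that folder from a script, a small sketch like the one below may help. It assumes the default location under the user's home directory, as in the Windows path above, and also checks the `OLLAMA_MODELS` environment variable, which Ollama uses to override the storage directory. The function name `default_models_dir` is hypothetical.

```python
import os
from pathlib import Path


def default_models_dir() -> Path:
    """Return the folder where Ollama is expected to keep model files.

    Assumption: OLLAMA_MODELS overrides the location when it is set;
    otherwise the default is <home>/.ollama/models.
    """
    override = os.environ.get("OLLAMA_MODELS")
    if override:
        return Path(override)
    return Path.home() / ".ollama" / "models"


models_dir = default_models_dir()
print(f"Models folder: {models_dir}")
print(f"Exists: {models_dir.is_dir()}")
```

On Windows, `Path.home()` resolves to `C:\Users\UserName`, so the result matches the path shown above.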

Step 4. Install AI Requester, which can be found at the following link: https://vovsoft.com/software/ai-requester/

Software of this kind makes it easy to switch between offline and online modes, so you can pick whichever suits your needs.

Step 5. Use the View menu and open "OpenAI Settings". Edit "API URL" to

That's all! You can now chat with any OpenAI-compatible model locally.
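To check from a script that the local server answers OpenAI-style requests, a minimal sketch follows. It assumes Ollama is running at its default local address, `http://localhost:11434`, and uses its OpenAI-compatible `/v1/chat/completions` route. The model name "mistral" is only an example, and the helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address plus its OpenAI-compatible prefix.
BASE_URL = "http://localhost:11434/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str, base_url: str = BASE_URL,
         timeout: float = 120.0) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With Ollama running and the model already downloaded, `print(chat("mistral", "Hello!"))` should print the model's reply without any internet access.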
